Ranking and Matchmaking

The TrueSkill ranking system is a skill-based ranking system for Xbox Live developed at Microsoft Research. The purpose of a ranking system is to identify and track the skills of gamers in a game (mode) so that they can be matched into competitive matches. The TrueSkill ranking system uses only the final standings of all teams in a game to update the skill estimates (ranks) of all gamers playing in that game. Ranking systems have been proposed for many sports, but possibly the most prominent ranking system in use today is Elo. What makes TrueSkill unique is that it gives the problem a fully Bayesian treatment, using approximate message passing (namely, Expectation Propagation) as the inference scheme. With this approach, it is possible not only to fully quantify the uncertainty in every gamer’s skill at each point in time but also to link skills together through time and compare the relative strength of players across centuries (see "TrueSkill Through Time" below).

TrueSkill Through Time: Revisiting the History of Chess Proceedings Article

In: Advances in Neural Information Processing Systems 20, pp. 931–938, The MIT Press, 2007.

TrueSkill(TM): A Bayesian Skill Rating System Proceedings Article

In: Advances in Neural Information Processing Systems 19, pp. 569–576, The MIT Press, 2006.

Ranking and Matchmaking Journal Article

In: Game Developer Magazine, no. 10, 2006.
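As a rough illustration of the Bayesian skill update described above, the following sketch covers only the two-player, no-draw special case, in which the Expectation Propagation step reduces to a closed-form update of each player's Gaussian skill belief. The function name `update_two_player`, the `(mu, sigma)` tuple representation, and the default `beta = 25/6` are assumptions made for illustration. The draw margin and the dynamics term that adds uncertainty between games are omitted, so this is not the production system.

```python
import math


def _pdf(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def _cdf(x):
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def update_two_player(winner, loser, beta=25.0 / 6.0):
    """Update two Gaussian skill beliefs after a win/loss result.

    winner, loser: (mu, sigma) tuples, i.e. mean and standard deviation
    of the current skill belief. beta is the assumed performance noise.
    Returns the updated (mu, sigma) tuples for winner and loser.
    """
    mu_w, sigma_w = winner
    mu_l, sigma_l = loser

    # Total standard deviation of the performance difference.
    c = math.sqrt(2.0 * beta ** 2 + sigma_w ** 2 + sigma_l ** 2)
    t = (mu_w - mu_l) / c

    # Moment-matching corrections for a Gaussian truncated at zero.
    # Note: _cdf(t) underflows for extremely negative t; a robust
    # implementation would guard against that.
    v = _pdf(t) / _cdf(t)
    w = v * (v + t)

    # The winner's mean moves up, the loser's down, each in proportion
    # to how uncertain that player's skill currently is.
    new_mu_w = mu_w + (sigma_w ** 2 / c) * v
    new_mu_l = mu_l - (sigma_l ** 2 / c) * v

    # Both beliefs become more certain after observing a result.
    new_sigma_w = sigma_w * math.sqrt(1.0 - (sigma_w ** 2 / c ** 2) * w)
    new_sigma_l = sigma_l * math.sqrt(1.0 - (sigma_l ** 2 / c ** 2) * w)

    return (new_mu_w, new_sigma_w), (new_mu_l, new_sigma_l)


if __name__ == "__main__":
    prior = (25.0, 25.0 / 3.0)  # assumed prior for a new player
    print(update_two_player(prior, prior))
```

For two players with identical priors, the winner's mean rises and the loser's falls by the same amount, and both standard deviations shrink. An unexpected result (a low-rated player beating a high-rated one) produces a larger shift than an expected one.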

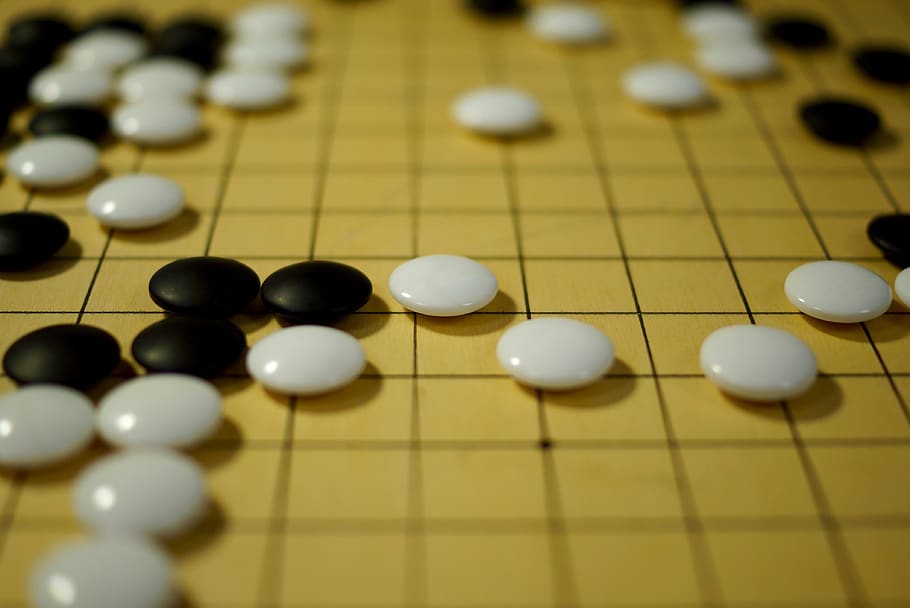

Computer Go

The game of Go is an ancient Chinese game of strategy for two players. By most measures of complexity it is more complex than Chess. While Deep Blue (and more recently Deep Fritz) play Chess at the world champion’s level, only recently has DeepMind demonstrated, with a system called AlphaGo, that a Go-playing program can beat the best human players. The reason that it took over 20 years to replicate the successes of Deep Blue for the game of Go appears to lie in its greater complexity, both in terms of the number of different positions and in the difficulty of defining an appropriate evaluation function for Go positions.

We have worked on automated ways of acquiring Go knowledge using machine learning to make radical progress on these two problems. Numerous Go servers on the internet offer thousands of records of games played by players who are very competent compared to today’s computer Go programs. The great challenge is to build machine learning algorithms that extract knowledge from these databases in a form that can be used for playing Go well.

Learning to Solve Game Trees Proceedings Article

In: Proceedings of the 24th International Conference on Machine Learning, pp. 839–846, 2007.

Bayesian Pattern Ranking for Move Prediction in the Game of Go Proceedings Article

In: Proceedings of the 23rd International Conference on Machine Learning, pp. 873–880, 2006.

Learning on Graphs in the Game of Go Proceedings Article

In: Proceedings of the International Conference on Artificial Neural Networks, pp. 347–352, 2001.

Learning to Fight

We apply reinforcement learning to the problem of finding good policies for a fighting agent in a commercial computer game. The learning agent is trained using the SARSA algorithm for on-policy learning of an action-value function represented by linear and neural network function approximators. Important aspects include the selection and construction of features, actions, and rewards, as well as other design choices necessary to integrate the learning process into the game. The learning agent is trained against the built-in AI of the game with different rewards encouraging aggressive or defensive behaviour.

Learning to Fight Proceedings Article

In: Proceedings of the International Conference on Computer Games: Artificial Intelligence, Design and Education, pp. 193–200, 2004.
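The SARSA update with a linear function approximator mentioned above can be sketched as follows. This is a generic textbook form, not the agent from the paper: the class name `LinearSarsaAgent`, the one-weight-vector-per-action layout, the epsilon-greedy exploration, and all hyperparameter defaults are assumptions for illustration, and the game-specific features, actions, and rewards are left to the caller.

```python
import random


class LinearSarsaAgent:
    """SARSA with a linear action-value function Q(s, a) = w_a . phi(s)."""

    def __init__(self, n_features, n_actions,
                 alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.n_actions = n_actions
        self.alpha = alpha      # learning rate (assumed value)
        self.gamma = gamma      # discount factor (assumed value)
        self.epsilon = epsilon  # exploration rate (assumed value)
        self.rng = random.Random(seed)
        # One weight vector per action, initialised to zero.
        self.weights = [[0.0] * n_features for _ in range(n_actions)]

    def q(self, features, action):
        """Estimated value of taking `action` in the state with `features`."""
        return sum(w * f for w, f in zip(self.weights[action], features))

    def select_action(self, features):
        """Epsilon-greedy choice over the current value estimates."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = [self.q(features, a) for a in range(self.n_actions)]
        return values.index(max(values))

    def update(self, features, action, reward,
               next_features, next_action, done=False):
        """Apply one on-policy TD update and return the TD error.

        The target uses the action actually chosen in the next state,
        which is what makes SARSA on-policy.
        """
        target = reward
        if not done:
            target += self.gamma * self.q(next_features, next_action)
        td_error = target - self.q(features, action)
        # Gradient of a linear Q with respect to w_action is `features`.
        for i, f in enumerate(features):
            self.weights[action][i] += self.alpha * td_error * f
        return td_error
```

In a training loop, the agent would call `select_action` on the next state before calling `update`, so that the same action is used both in the update target and as the next move. Shaping the `reward` passed in is where behaviour such as aggressive or defensive play would be encouraged.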

Learning to Race

Drivatars are a novel form of learning artificial intelligence (AI) developed for Forza Motorsport. The technology behind the Drivatar concept is exploited within the game in two ways:

- as an innovative new learning game feature: create your own AI driver!

- as the underlying model for all the AI competitors in the Arcade and Career modes.

Our original goal in developing Drivatars was to create human-like computer opponents to race against. For more details please visit the Drivatar project page.

Social Relationships in First-Person Shooter Games

Online video games can be seen as a medium for the formation and maintenance of social relationships. We explore what social relationships mean in the context of online First-Person Shooter (FPS) games, how these relationships influence game experience, and how players manage them. We combine qualitative interview data with quantitative game log data, and find that despite the gap between the non-persistent game world and potentially persistent social relationships, a diversity of social relationships emerges and plays a central role in the enjoyment of online FPS games. We report the forms, development, and impact of such relationships, and discuss our findings in light of design implications and comparison with other game genres.

Sociable Killers: Understanding Social Relationships in an Online First-Person Shooter Game Proceedings Article

In: Proceedings of the 2011 ACM Conference on Computer Supported Cooperative Work, pp. 197–206, 2011.